Metrics, although necessary, can easily influence behavior in undesired ways. They can’t be taken lightly, and I would never recommend limiting the number of tracked metrics to just a handful. But I have found four learning metrics that managers like, because they are likely to trigger productive discussions about your learning solution’s deployment and impact.

Butts in seats

You still have to count them (photo: Beatrice Murch)

While we have all heard a thousand times that metrics have to move beyond attendance, many good conversations start with attendance statistics. A learning solution’s effectiveness is of little use if it doesn’t reach enough people, or the right people. Avoid bringing just a number per location: good attendance tables (including demographic components such as geography, group, role and seniority) lead to good conversations about a learning solution’s potential to drive change, its operational challenges, and budget and milestone reviews.

Smile sheets

Moving on to Kirkpatrick’s level 1: so easy to dismiss, but so important for understanding the quality of the experience as a crucial enabler of learning. I would, however, avoid the actual smileys and go for the business-oriented type of survey that managers are used to seeing elsewhere in the business. Specifically, using a 5-point Likert scale, I ask two questions: Were you satisfied with the learning solution? Would you recommend this learning solution to others?

To calculate the results, count the top answers, subtract the number of answers that fall in the bottom three ratings, divide by the total number of answers and multiply by 100. The result ranges from -100 to 100; at a minimum, you want a positive number. What’s considered a “very good” result will depend on your specific business, but will probably be around 30.
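The arithmetic can be sketched in a few lines of Python. This is only an illustration: the function name `net_score` is made up, and I am assuming that “top answers” means the single highest rating (5) and that the bottom three ratings are 1, 2 and 3. Both sets are parameters, so adjust them to match how your business defines them.

```python
from collections import Counter

def net_score(answers, top=(5,), bottom=(1, 2, 3)):
    """Net score on a 5-point scale, ranging from -100 to 100.

    Assumption: `top` defaults to the highest rating only and `bottom`
    to the three lowest ratings. Change them if your definition differs.
    """
    if not answers:
        raise ValueError("no answers to score")
    counts = Counter(answers)
    top_count = sum(counts[rating] for rating in top)
    bottom_count = sum(counts[rating] for rating in bottom)
    # (top - bottom) / total, expressed on a -100..100 scale
    return 100 * (top_count - bottom_count) / len(answers)

# Hypothetical survey: five 5s, two 4s, and one each of 3, 2 and 1
answers = [5, 5, 5, 4, 4, 3, 2, 5, 5, 1]
print(net_score(answers))  # 100 * (5 - 3) / 10 = 20.0
```

Note that under these assumptions the 4s count toward the total but toward neither group, which is why a survey full of lukewarm answers lands near zero.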

For the recommendation question, the result is called NPS (Net Promoter Score), and for the satisfaction question, NSAT (net satisfaction). Although I have seen these metrics exhibit consistent bias by culture and context (for example, the same learning solution is rated lower in Denmark than in Ireland, or by a group that has been recently reorganized), they are a great starting point for conversations with management because they demonstrate the ability of your solution (and by extension, your team) to engage employees.

Management feedback

Always capture and tabulate positive management feedback

Although this kind of feedback is qualitative and potentially subjective, managers pay close attention to what their peers are saying about a learning solution. And when a general manager tells you that he has heard great things about the new program, you have an ally who may help in geographies or divisions where the solution has not been deployed yet, or in organizations that remain skeptical. Whether it’s an email, a hallway conversation with permission to quote, or a brief mention during a staff meeting, these little feedback nuggets carry enough weight to be brought to the table.

Follow-up activity

LMS progress and attendance are great, but they don’t tell us anything about impact outside the learning environment. With a bit of effort, however, it is possible to obtain additional data points that do indicate impact.

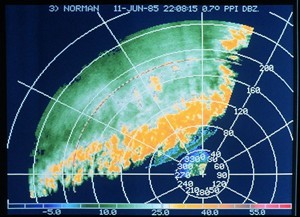

After delivery, your LMS is no longer a good radar. Keep track of your solution’s impact by adding other data points

For example, it is very likely that you are setting up job aids, portals, social media channels and other systems and resources that support the learning solution after it has been delivered. Because these tend to sit outside the LMS, they don’t get tracked. But it doesn’t take much effort to “plant” counters on job aids, so you know which employees are using them and how often. Or measure social chatter within the channels you created. Or measure traffic to a supporting portal. With a little help from your friends in IT, you can add simple telemetry that gathers evidence of new behavior in corporate systems. A few data points are all you need to show management how the learning solution is driving the desired impact.
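To give an idea of how little is needed, here is a minimal sketch of what such a counter could compute. Everything in it is hypothetical: I am assuming IT can hand you a list of (employee, resource) usage events, and the function name `summarize_usage` and the sample data are invented for illustration.

```python
from collections import defaultdict

def summarize_usage(events):
    """Summarize usage events per resource.

    Assumption: `events` is an iterable of (employee_id, resource) pairs,
    one pair per time an employee opened a job aid, portal page, etc.
    """
    opens = defaultdict(int)   # total uses per resource
    users = defaultdict(set)   # distinct employees per resource
    for employee_id, resource in events:
        opens[resource] += 1
        users[resource].add(employee_id)
    return {
        resource: {"opens": opens[resource], "unique_users": len(users[resource])}
        for resource in opens
    }

# Hypothetical events collected after delivery
events = [
    ("e1", "checklist"),
    ("e2", "checklist"),
    ("e1", "checklist"),
    ("e3", "portal"),
]
print(summarize_usage(events))
```

Reporting both the total and the number of distinct employees answers the two questions above: who is using the resource, and how often.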

That’s all?

Certainly not. Although you may notice that the above four points map loosely to Kirkpatrick’s levels, there are many other metrics I would use, including longitudinal studies. For example, after a career advancement program, I would track participants for two years to see if their promotion or transfer rate differs from that of a control group. My experience with these studies, however, is that they are not as interesting to management, because they span more than one or two performance review periods or business cycles. Yes, metrics can easily influence behavior.